The safety practices of some of the world’s biggest artificial intelligence companies are “far short” of global standards, a new study has found, with top Chinese companies DeepSeek and Alibaba Cloud among the worst offenders.

The latest edition of the Future of Life Institute’s AI safety index, released on Wednesday, found that while the companies were rushing to develop superintelligence, none had a robust strategy for controlling such advanced systems. The safety evaluation was conducted by an independent panel of experts.

“All of the companies reviewed are racing toward AGI (Artificial General Intelligence)/superintelligence without presenting any explicit plans for controlling or aligning such smarter-than-human technology, thus leaving the most consequential risks effectively unaddressed,” the report noted.

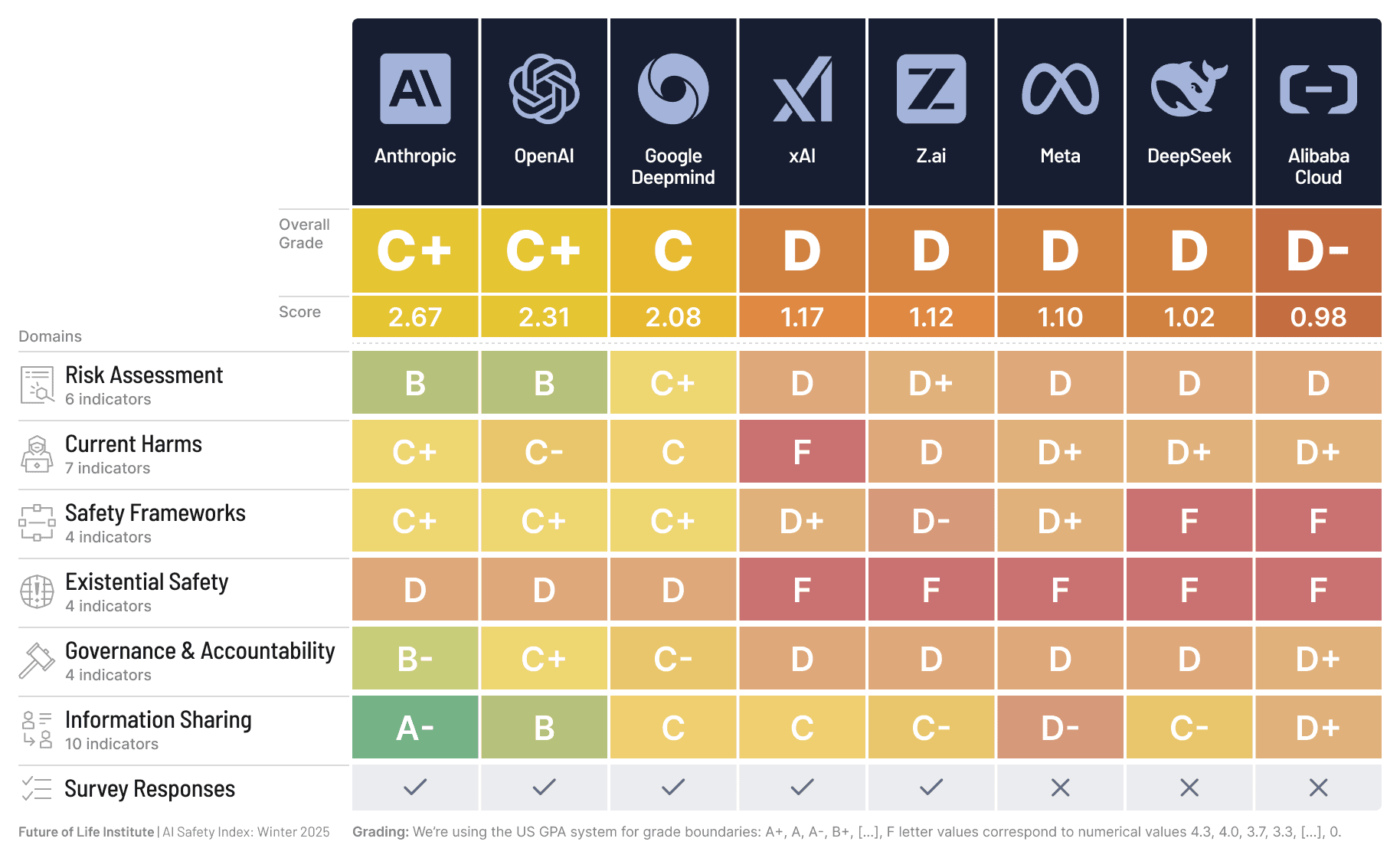

The report ranked leading AI firms including Anthropic, OpenAI, Google DeepMind, xAI, Meta and key Chinese outfits Z.ai, DeepSeek and Alibaba Cloud after testing them on parameters such as risk assessment, current harms, safety frameworks, existential safety, governance and accountability, and information sharing.

Not a single firm managed to score a grade higher than ‘C’, according to the report. US firm Anthropic ranked highest with a marginally higher score than peers OpenAI and Google DeepMind.

All Chinese firms, meanwhile, scored a ‘D’ grade, with Alibaba Cloud faring the worst at D-minus.

DeepSeek and Alibaba Cloud both received a failing score on safety frameworks — a measure assessing how companies had addressed known risks and actively engaged in searching for other potential threats.

DeepSeek, Z.ai and Alibaba Cloud had no publicly available safety framework, the report said.

Every firm assessed also ranked very poorly on existential safety, a measure that “evaluates whether companies have published comprehensive, concrete strategies for managing catastrophic risks” from AGI or superintelligence.

AGI refers to a level of AI with human-like abilities to understand or learn any intellectual task that a human being can. Such AI does not exist for now, but companies like OpenAI have said that they could achieve that level by the end of the decade.

“Existential safety remains the sector’s core structural failure, making the widening gap between accelerating AGI/superintelligence ambitions and the absence of credible control plans increasingly alarming,” the report said.

“It’s horrifying that the very companies whose leaders predict AI could end humanity have no strategy to avert such a fate,” David Krueger, Assistant Professor at Université de Montréal and a reviewer on the panel, said in a press release.

Despite their failures, however, the report said that reviewers “noted and commended” several safety practices of Chinese companies mandated under Beijing’s regulations.

“Binding requirements for content labelling and incident reporting, and voluntary national technical standards outlining structured AI risk-management processes, give Chinese firms stronger baseline accountability for some indicators compared to their Western counterparts,” it said.

It also noted that Chinese firm Zhipu’s Z.ai had indicated that it was developing an existential-risk plan.

‘AI less regulated than restaurants’

A Google DeepMind spokesperson told Reuters the company will “continue to innovate on safety and governance at pace with capabilities” as its models become more advanced.

xAI responded to Reuters’ queries with “Legacy media lies”, in what seemed to be an automated response.

Anthropic, OpenAI, Meta, Z.ai, DeepSeek, and Alibaba Cloud did not immediately respond to requests for comment on the study.

The Future of Life Institute’s report comes at a time of heightened public concern about the societal impact of smarter-than-human systems capable of reasoning and logical thinking, after several cases of suicide and self-harm were tied to AI chatbots.

“Despite recent uproar over AI-powered hacking and AI driving people to psychosis and self-harm, US AI companies remain less regulated than restaurants and continue lobbying against binding safety standards,” said Max Tegmark, MIT professor and Future of Life president.

The AI race also shows no signs of slowing, with major tech companies committing hundreds of billions of dollars to upgrading and expanding their machine learning efforts.

In October, a group including scientists Geoffrey Hinton and Yoshua Bengio called for a ban on developing superintelligent artificial intelligence until the public demands it and science paves a safe way forward.

“AI CEOs claim they know how to build superhuman AI, yet none can show how they’ll prevent us from losing control – after which humanity’s survival is no longer in our hands. I’m looking for proof that they can reduce the annual risk of control loss to one in a hundred million, in line with nuclear reactor requirements. Instead, they admit the risk could be one in ten, one in five, even one in three, and they can neither justify nor improve those numbers,” Stuart Russell, Professor of Computer Science at UC Berkeley and a reviewer on the panel, said in public statements.

“It’s possible that the current technology direction can never support the necessary safety guarantees, in which case it’s really a dead end.”

- Vishakha Saxena, with Reuters
