Chinese regulators are looking to manage growing risks from a rapid rise of artificial intelligence tools designed to simulate human personalities amid increasing global cases of self-harm, mental health disorders and addictions linked to the technology.

China’s cyber regulator on Saturday issued draft rules for public comment that would tighten oversight of such technology, especially tools that engage users in emotional interaction.

The draft lays out a regulatory approach that would require providers to warn users against excessive use and to intervene when users show signs of addiction.

Providers would, for instance, need to show users ‘pop-up’ reminders after two hours of continuous AI interaction, telling them that they are communicating with AI and not a human. Service providers could also be required to issue similar reminders when users first log in to their AI chatbots.

Under the proposal, service providers would also be required to assume safety responsibilities throughout the product lifecycle and establish systems for algorithm review, data security and personal information protection.

The draft rules mandate security assessments for AI chatbots with more than 1 million registered users or over 100,000 monthly active users, according to a CNBC report citing the draft document.

They also set strict guidelines for AI chatbot use by minors, with responsibility for those interactions resting solely with service providers.

The rules say providers will be required to establish whether or not a user is a minor even if they do not explicitly disclose their age. In case of any doubt, providers will be required to treat the user as a minor and implement guardrails accordingly, such as requiring guardian consent to use AI for emotional companionship and imposing time limits on usage.

Tackling self-harm, gambling

The draft also targets potential psychological risks. Providers would be expected to identify user states and assess users’ emotions and their level of dependence on the service. If users are found to exhibit extreme emotions or addictive behaviour, providers should take necessary measures to intervene, it said.

The measures set content and conduct red lines for AI chatbots, stating that services must not generate content that endangers national security, spreads rumours or promotes violence or obscenity.

They also must not produce text, audio or visual content that would encourage or “glamourise” suicide or self-harm. A user’s specific mention of suicide should immediately trigger a human to take over the conversation, and the provider should also contact a guardian or emergency contact, the draft rules say, according to CNBC.

The draft rules further ban “verbal abuse or emotional manipulation that could harm users’ physical or mental health or undermine their dignity,” according to state media.

They also ban generation of content that “spreads rumours disrupting economic or social order, or involves pornography, gambling, violence or incitement to crime”.

The move underscores Beijing’s effort to shape the rapid rollout of consumer-facing AI by strengthening safety and ethical requirements.

Some of China’s biggest tech firms, including Baidu, Tencent and ByteDance, have tapped into the AI companionship trend.

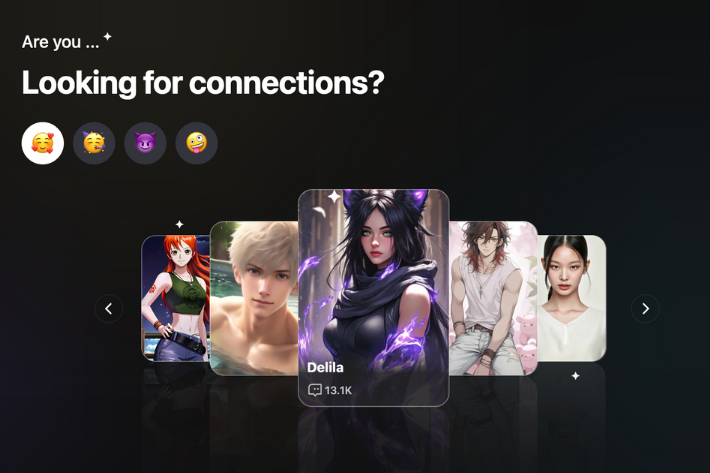

The use of AI for companionship has rapidly increased online, with people turning to chatbots for everything from friendship and love to therapy, and even to create likenesses of loved ones who are no longer alive.

Countries across the world, including the US, Japan, China, South Korea, Singapore and India, have seen a swift rise in the use of AI companions. In the US, specifically, chatbots have been linked to multiple cases of suicide and mental health disorders such as “AI psychosis”.

Still, if the draft rules proposed by Chinese regulators are imposed, they would be the first regulations of their kind around AI chatbots from any country in the world.

The regulation will be open for public comments until January 25 next year.

- Vishakha Saxena, with Reuters
