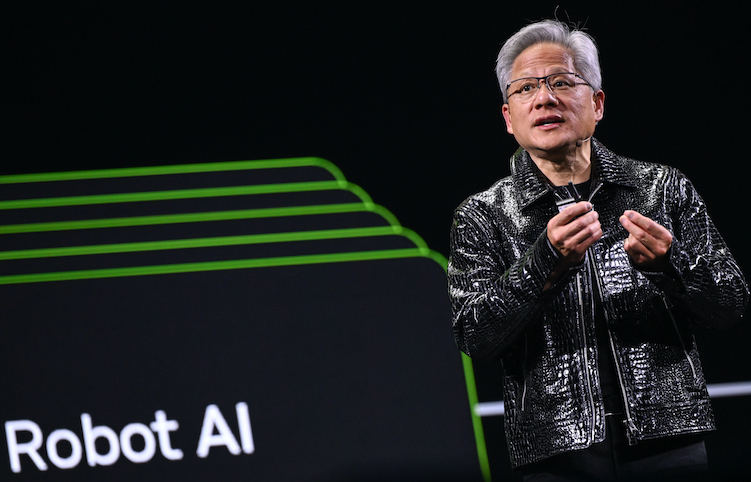

Nvidia CEO Jensen Huang is already talking up his next generation of computer chips, saying on Monday they can deliver five times the artificial-intelligence computing power of the group’s previous chips when serving chatbots and other AI apps.

In a speech at the Consumer Electronics Show in Las Vegas, Huang – ever the salesman – said the company’s next generation of chips is in “full production.”

The boss of the world’s most valuable company revealed new details about chips that will arrive later this year. His executives have told Reuters the new chips are already in the company’s labs being tested by AI firms, as Nvidia faces increasing competition from rivals as well as from its own customers.


Vera Rubin ‘pods’

The Vera Rubin platform, made up of six separate Nvidia chips, is expected to debut later this year, with the flagship server containing 72 of the company’s graphics units and 36 of its new central processors.

Huang showed how they can be strung together into “pods” with more than 1,000 Rubin chips and said they could improve the efficiency of generating what are known as “tokens” – the fundamental unit of AI systems – by 10 times.

To achieve the new performance results, however, Huang said the Rubin chips use a proprietary data format that the company hopes the wider industry will adopt.

“This is how we were able to deliver such a gigantic step up in performance, even though we only have 1.6 times the number of transistors,” Huang said.

Competition on chatbot responses

While Nvidia still dominates the market for training AI models, it faces far more competition – from traditional rivals such as Advanced Micro Devices as well as customers like Alphabet’s Google – in delivering the fruits of those models to hundreds of millions of users of chatbots and other technologies.

Much of Huang’s speech focused on how well the new chips would work for that task, including adding a new layer of storage technology called “context memory storage” aimed at helping chatbots provide snappier responses to long questions and conversations.

Nvidia also touted a new generation of networking switches with a new kind of connection called co-packaged optics. The technology, which is key to linking together thousands of machines into one, competes with offerings from Broadcom and Cisco Systems.

Nvidia said that CoreWeave will be among the first to have the new Vera Rubin systems and that it expects Microsoft, Oracle, Amazon and Alphabet to adopt them as well.

In other announcements, Huang highlighted new software that can help self-driving cars make decisions about which path to take – and leave a paper trail for engineers to use afterward.

Alpamayo software

Nvidia showed research on the software, called Alpamayo, late last year, and Huang said on Monday it would be released more widely, along with the data used to train it, so that automakers can evaluate it.

“Not only do we open-source the models, we also open-source the data that we use to train those models, because only in that way can you truly trust how the models came to be,” Huang said from a stage in Las Vegas.

Last month, Nvidia scooped up talent and chip technology from startup Groq, including executives who were instrumental in helping Alphabet’s Google design its own AI chips.

While Google is a major Nvidia customer, its own chips have emerged as one of Nvidia’s biggest threats as Google works closely with Meta Platforms and others to chip away at Nvidia’s AI stronghold.

During a question-and-answer session with financial analysts after his speech, Huang said the Groq deal “won’t affect our core business” but could result in new products that expand its lineup.

At the same time, Nvidia is eager to show that its latest products can outperform older chips like the H200, which US President Donald Trump has allowed to flow to China.

Reuters has reported that the H200 chip, which was the predecessor to Nvidia’s current “Blackwell” chip, is in high demand in China, which has alarmed China hawks across the US political spectrum.

Huang told financial analysts after his keynote that demand for the H200 chips is strong in China, and chief financial officer Colette Kress said Nvidia has applied for licences to ship the chips to China but is still waiting for approvals from the US and other governments.

- Reuters with additional editing by Jim Pollard
